You may never have heard the term “synthetic media,” more commonly known as “deepfakes,” but our military, law enforcement and intelligence agencies certainly have. They are hyper-realistic video and audio recordings that use artificial intelligence and “deep” learning to create “fake” content or “deepfakes.” The U.S. government has grown increasingly concerned about their potential to be used to spread disinformation and commit crimes. That’s because the creators of deepfakes have the power to make people say or do anything, at least on our screens. Most Americans have no idea how far the technology has come in just the last four years or the danger, disruption and opportunities that come with it.

Deepfake Tom Cruise: You know I do all my own stunts, obviously. I also do my own music.

Chris Ume/Metaphysic

This is not Tom Cruise. It’s one of a series of hyper-realistic deepfakes of the movie star that began appearing on the video-sharing app TikTok earlier this year.

Deepfake Tom Cruise: Hey, what’s up TikTok?

For days people wondered if they were real, and if not, who had created them.

Deepfake Tom Cruise: It’s important.

Finally, a modest, 32-year-old Belgian visual effects artist named Chris Umé stepped forward to claim credit.

Chris Umé: We believed as long as we’re making clear this is a parody, we’re not doing anything to harm his image. But after a few videos, we realized like, this is blowing up; we’re getting millions and millions and millions of views.

Umé says his work is made easier because he teamed up with a Tom Cruise impersonator whose voice, gestures and hair are nearly identical to the real McCoy. Umé only deepfakes Cruise’s face and stitches that onto the real video and sound of the impersonator.

Deepfake Tom Cruise: That’s where the magic happens.

For technophiles, DeepTomCruise was a tipping point for deepfakes.

Deepfake Tom Cruise: Still got it.

Bill Whitaker: How do you make this so seamless?

Chris Umé: It begins with training a deepfake model, of course. I have all the face angles of Tom Cruise, all the expressions, all the emotions. It takes time to create a really good deepfake model.

Bill Whitaker: What do you mean “training the model?” How do you train your computer?

Chris Umé: “Training” means it’s going to analyze all the images of Tom Cruise, all his expressions, compared to my impersonator. So the computer’s gonna teach itself: When my impersonator is smiling, I’m gonna recreate Tom Cruise smiling, and that’s, that’s how you “train” it.

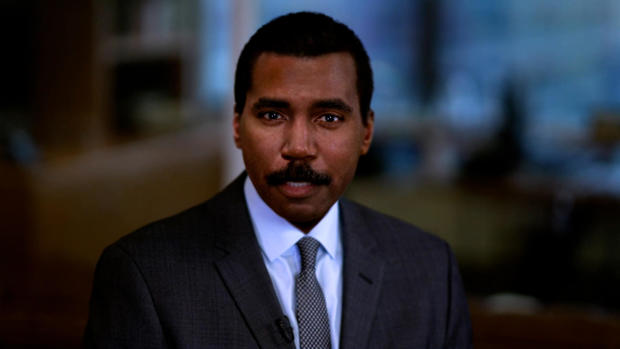

Using video from the CBS News archives, Chris Umé was able to train his computer to learn every aspect of my face, and wipe away the decades. This is how I looked 30 years ago. He can even remove my mustache. The possibilities are endless and a little frightening.

Chris Umé: I see a lot of mistakes in my work. But I don’t mind it, actually, because I don’t want to fool people. I just want to show them what’s possible.

Bill Whitaker: You don’t want to fool people.

Chris Umé: No. I want to entertain people, I want to raise awareness, and I want to show where it’s all going.

Nina Schick: It is without a doubt one of the most important revolutions in the future of human communication and perception. I would say it’s analogous to the birth of the internet.

Political scientist and technology consultant Nina Schick wrote one of the first books on deepfakes. She first came across them four years ago when she was advising European politicians on Russia’s use of disinformation and social media to interfere in democratic elections.

Bill Whitaker: What was your reaction when you first realized this was possible and was going on?

Nina Schick: Well, given that I was coming at it from the perspective of disinformation and manipulation in the context of elections, the fact that AI can now be used to make images and video that are fake, that look hyper-realistic. I thought, well, from a disinformation perspective, this is a game-changer.

So far, there’s no evidence deepfakes have “changed the game” in a U.S. election, but earlier this year the FBI put out a notification warning that “Russian [and] Chinese… actors are using synthetic profile images,” creating deepfake journalists and media personalities to spread anti-American propaganda on social media.

The U.S. military, law enforcement and intelligence agencies have kept a wary eye on deepfakes for years. At a 2019 hearing, Senator Ben Sasse of Nebraska asked if the U.S. is prepared for the onslaught of disinformation, fakery and fraud.

Ben Sasse: When you think about the catastrophic potential to public trust and to markets that could come from deepfake attacks, are we organized in a way that we could possibly respond fast enough?

Dan Coats: We clearly need to be more agile. It poses a major threat to the United States and something that the intelligence community needs to be restructured to address.

Since then, technology has continued moving at an exponential pace while U.S. policy has not. Efforts by the government and big tech to detect synthetic media are competing with a community of “deepfake artists” who share their latest creations and techniques online.

Like the internet, the first place deepfake technology took off was in pornography. The sad fact is the majority of deepfakes today consist of women’s faces, mostly celebrities, superimposed onto pornographic videos.

Nina Schick: The first use case in pornography is just a harbinger of how deepfakes can be used maliciously in many different contexts, which are now starting to arise.

Bill Whitaker: And they’re getting better all the time?

Nina Schick: Yes. The incredible thing about deepfakes and synthetic media is the pace of acceleration when it comes to the technology. And by five to seven years, we are basically looking at a trajectory where any single creator, so a YouTuber, a TikToker, will be able to create the same level of visual effects that is only accessible to the most well-resourced Hollywood studio today.

The technology behind deepfakes is artificial intelligence, which mimics the way humans learn. In 2014, researchers for the first time used computers to create realistic-looking faces using something called “generative adversarial networks,” or GANs.

Nina Schick: So you set up an adversarial game where you have two AIs combating each other to try and create the best fake synthetic content. And as these two networks combat each other, one trying to generate the best image, the other trying to detect where it could be better, you basically end up with an output that is increasingly improving all the time.
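
The adversarial game Schick describes can be illustrated with a toy sketch. What follows is a minimal one-dimensional example in Python, not the method used by any tool mentioned in this report. A "generator" `G(z) = a*z + b` tries to produce numbers that resemble samples from a target bell curve, while a "discriminator" `D(x)` scores how real a number looks. The function name `train_toy_gan`, the two linear models, the learning rate and the target distribution are all assumptions chosen for illustration; real deepfake systems use deep neural networks trained on images.

```python
import math
import random


def sigmoid(t):
    """Numerically safe logistic function, output in [0, 1]."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Train a toy 1-D GAN and return (a, b, w, c).

    Generator (hypothetical):     G(z) = a*z + b
    Discriminator (hypothetical): D(x) = sigmoid(w*x + c)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.1, 0.0  # discriminator parameters

    for _ in range(steps):
        x_real = rng.gauss(real_mean, real_std)  # a "real" sample
        z = rng.gauss(0.0, 1.0)                  # random noise
        x_fake = a * z + b                       # the generator's forgery

        # Discriminator step: ascend log D(real) + log(1 - D(fake)),
        # i.e. get better at telling real from fake.
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: ascend log D(fake), i.e. nudge the forgery
        # toward whatever the updated discriminator rates as real.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w  # d/dx of log D(x) at x_fake
        a += lr * grad_x * z
        b += lr * grad_x

    return a, b, w, c


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print(f"generator: G(z) = {a:.3f}*z + {b:.3f}")
    print(f"discriminator: D(x) = sigmoid({w:.3f}*x + {c:.3f})")
```

Each loop iteration is one round of the game: the discriminator improves at spotting the fake, then the generator adjusts to fool it. On a toy problem like this the generator's offset `b` may circle around the real mean rather than settle on it, which hints at why adversarial training can be hard to stabilize in practice.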

Schick says the power of generative adversarial networks is on full display at a website called “ThisPersonDoesNotExist.com”

Nina Schick: Every time you refresh the page, there’s a new image of a person who does not exist.

Each is a one-of-a-kind, entirely AI-generated image of a human being who never has, and never will, walk this Earth.

Nina Schick: You can see every pore on their face. You can see every hair on their head. But now imagine that technology being expanded out not only to human faces, in still images, but also to video, to audio synthesis of people’s voices and that’s really where we’re heading right now.

Bill Whitaker: This is mind-blowing.

Nina Schick: Yes. [Laughs]

Bill Whitaker: What’s the positive side of this?

Nina Schick: The technology itself is neutral. So just as bad actors are, without a doubt, going to be using deepfakes, it is also going to be used by good actors. So first of all, I would say that there’s a very compelling case to be made for the commercial use of deepfakes.

Victor Riparbelli is CEO and co-founder of Synthesia, based in London, one of dozens of companies using deepfake technology to transform video and audio productions.

Victor Riparbelli: The way Synthesia works is that we’ve essentially replaced cameras with code, and once you’re working with software, we do a lotta things that you wouldn’t be able to do with a normal camera. We’re still very early. But this is gonna be a fundamental change in how we create media.

Synthesia makes and sells “digital avatars,” using the faces of paid actors to deliver personalized messages in 64 languages… and allows corporate CEOs to address employees overseas.

Snoop Dogg: Did somebody say, Just Eat?

Synthesia has also helped entertainers like Snoop Dogg go forth and multiply. This elaborate TV commercial for European food delivery service Just Eat cost a fortune.

Snoop Dogg: J-U-S-T-E-A-T-…

Victor Riparbelli: Just Eat has a subsidiary in Australia, which is called Menulog. So what we did with our technology was we switched out the word Just Eat for Menulog.

Snoop Dogg: M-E-N-U-L-O-G… Did somebody say, “MenuLog?”

Victor Riparbelli: And all of a sudden they had a localized version for the Australian market without Snoop Dogg having to do anything.

Bill Whitaker: So he makes twice the money, huh?

Victor Riparbelli: Yeah.

All it took was eight minutes of me reading a script on camera for Synthesia to create my synthetic talking head, complete with my gestures, head and mouth movements. Another company, Descript, used AI to create a synthetic version of my voice, with my cadence, tenor and syncopation.

Deepfake Bill Whitaker: This is the result. The words you’re hearing were never spoken by the real Bill into a microphone or to a camera. He merely typed the words into a computer and they come out of my mouth.

It may look and sound a little rough around the edges right now, but as the technology improves, the possibilities of spinning words and images out of thin air are endless.

Deepfake Bill Whitaker: I’m Bill Whitaker. I’m Bill Whitaker. I’m Bill Whitaker.

Bill Whitaker: Wow. And the head, the eyebrows, the mouth, the way it moves.

Victor Riparbelli: It’s all synthetic.

Bill Whitaker: I could be lounging at the beach. And say, “Folks, you know, I’m not gonna come in today. But you can use my avatar to do the work.”

Victor Riparbelli: Maybe in a few years.

Bill Whitaker: Don’t tell me that. I’d be tempted.

Tom Graham: I think it will have a big impact.

The rapid advances in synthetic media have caused a virtual gold rush. Tom Graham, a London-based lawyer who made his fortune in cryptocurrency, recently started a company called Metaphysic with none other than Chris Umé, creator of DeepTomCruise. Their goal: develop software to allow anyone to create Hollywood-caliber movies without lights, cameras, or even actors.

Tom Graham: As the hardware scales and as the models become more efficient, we can scale up the size of that model to be an entire Tom Cruise body, movement and everything.

Bill Whitaker: Well, talk about disruptive. I mean, are you gonna put actors out of jobs?

Tom Graham: I think it’s a great thing if you’re a well-known actor today because you may be able to let somebody collect data for you to create a version of yourself in the future where you could be acting in movies after you have deceased. Or you could be the director, directing your younger self in a movie or something like that.

If you are wondering how all of this is legal, most deepfakes are considered protected free speech. Attempts at legislation are all over the map. In New York, commercial use of a performer’s synthetic likeness without consent is banned for 40 years after their death. California and Texas prohibit deceptive political deepfakes in the lead-up to an election.

Nina Schick: There are so many ethical, philosophical gray zones here that we really need to think about.

Bill Whitaker: So how do we as a society grapple with this?

Nina Schick: Just understanding what’s going on. Because a lot of people still don’t know what a deepfake is, what synthetic media is, that this is now possible. The counter to that is, how do we inoculate ourselves and understand that this kind of content is coming and exists without being completely cynical? Right? How do we do it without losing trust in all authentic media?

That’s going to require all of us to figure out how to maneuver in a world where seeing is not always believing.

Produced by Graham Messick and Jack Weingart. Broadcast associate, Emilio Almonte. Edited by Richard Buddenhagen.