Google announced a breakthrough technology called CALM that speeds up large language models (like GPT-3 and LaMDA) without compromising performance levels.

Larger Training Data Is Better But Comes With a Cost

Large Language Models (LLMs) train on large amounts of data.

Training the language models on larger amounts of data results in the model learning new abilities that aren’t always planned for.

For example, adding more training data to a language model can unexpectedly result in it gaining the ability to translate between different languages, even though it wasn’t trained to do that.

These new abilities are called emergent abilities, abilities that aren’t necessarily planned for.

A different research paper (PDF) about emergent abilities states:

“Although there are dozens of examples of emergent abilities, there are currently few compelling explanations for why such abilities emerge in the way they do.”

In other words, the researchers can’t explain why particular abilities are learned.

But it’s well known that scaling up the amount of data used to train the model allows it to gain more abilities.

The downside of scaling up the training data, and with it the size of the model, is that it takes more computational power to produce an output, which makes the AI slower at the time it is generating a text output (a moment that is called the “inference time”).

So the trade-off with making an AI smarter with more data is that the AI also becomes slower at inference time.

Google’s new research paper (Confident Adaptive Language Modeling PDF) describes the problem like this:

“Recent advances in Transformer-based large language models (LLMs) have led to significant performance improvements across many tasks.

These gains come with a drastic increase in the models’ size, potentially leading to slow and costly use at inference time.”

Confident Adaptive Language Modeling (CALM)

Researchers at Google came upon an interesting solution for speeding up the language models while also maintaining high performance.

The solution, to make an analogy, is somewhat like the difference between answering an easy question and solving a more difficult one.

An easy question, like what color is the sky, can be answered with little thought.

But a hard question requires one to stop and think a little more to find the answer.

Computationally, large language models don’t make a distinction between a hard part of a text generation task and an easy part.

They generate text for both the easy and difficult parts using their full computing power at inference time.

Google’s solution is called Confident Adaptive Language Modeling (CALM).

What this new framework does is devote fewer resources to trivial portions of a text generation task and devote the full power to the more difficult parts.

The research paper on CALM states the problem and solution like this:

“Recent advances in Transformer-based large language models (LLMs) have led to significant performance improvements across many tasks.

These gains come with a drastic increase in the models’ size, potentially leading to slow and costly use at inference time.

In practice, however, the series of generations made by LLMs is composed of varying levels of difficulty.

While certain predictions truly benefit from the models’ full capacity, other continuations are more trivial and can be solved with reduced compute.

…While large models do better in general, the same amount of computation may not be required for every input to achieve similar performance (e.g., depending on if the input is easy or hard).”

What is Google CALM and Does it Work?

CALM works by dynamically allocating resources depending on the complexity of the individual part of the task, using an algorithm to predict whether something needs full or partial resources.
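To make the idea concrete, here is a minimal Python sketch of per-token early exiting. It is an illustration under stated assumptions, not Google’s implementation: the function name `decode_token_with_early_exit`, the toy layers, and the toy confidence score are all hypothetical stand-ins for a real decoder and a real confidence estimator.

```python
from typing import Callable, List, Sequence, Tuple

Layer = Callable[[List[float]], List[float]]


def decode_token_with_early_exit(
    hidden: List[float],
    layers: Sequence[Layer],
    confidence: Callable[[List[float]], float],
    threshold: float,
) -> Tuple[List[float], int]:
    """Run decoder layers one at a time; stop once confidence reaches the threshold.

    Returns the final hidden state and the number of layers actually used.
    """
    layers_used = 0
    for layer in layers:
        hidden = layer(hidden)
        layers_used += 1
        if confidence(hidden) >= threshold:
            break  # confident enough: skip the remaining layers for this token
    return hidden, layers_used


# Toy setup (hypothetical): the hidden state is a single "certainty" score,
# and every layer raises it by 0.25, capped at 1.0.
def make_layer(step: float) -> Layer:
    return lambda h: [min(1.0, h[0] + step)]


layers = [make_layer(0.25) for _ in range(4)]
confidence = lambda h: h[0]

_, easy_layers = decode_token_with_early_exit([0.75], layers, confidence, 0.9)
_, hard_layers = decode_token_with_early_exit([0.0], layers, confidence, 0.9)
print(easy_layers, hard_layers)  # 1 4
```

In this toy run the "easy" token exits after a single layer, while the "hard" token needs all four, which mirrors the easy-question versus hard-question analogy above.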

The research paper shares that they tested the new system on various natural language processing tasks (“text summarization, machine translation, and question answering”) and found that they were able to speed up inference by about a factor of three (300%).

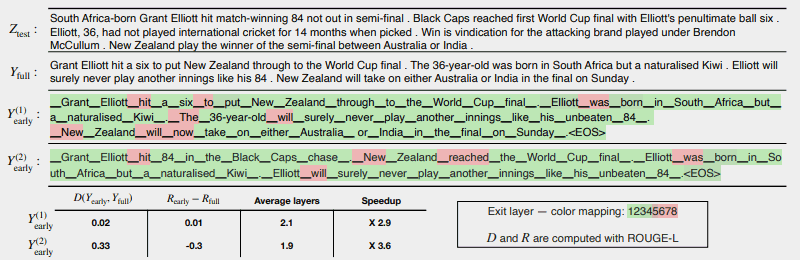

The following illustration shows how well the CALM system works.

The few areas in red indicate where the machine had to use its full capacity on that section of the task.

The areas in green are where the machine used less than half of its capacity.

Red = Full Capacity/Green = Less Than Half Capacity

This is what the research paper says about the above illustration:

“CALM accelerates the generation by early exiting when possible, and selectively using the full decoder’s capacity only for few tokens, demonstrated here on a CNN/DM example with softmax-based confidence measure. Y (1) early and Y (2) early use different confidence thresholds for early exiting.

Bellow (sic) the text, we report the measured textual and risk consistency of each of the two outputs, along with efficiency gains.

The colors represent the number of decoding layers used for each token: light green shades indicate less than half of the total layers.

Only a few selected tokens use the full capacity of the model (colored in red), while for most tokens the model exits after one or few decoding layers (colored in green).”
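The caption mentions a softmax-based confidence measure and two different confidence thresholds. The Python sketch below shows one plausible way such a measure could behave. It assumes the confidence is the gap between the two highest softmax probabilities at each layer; the helper names (`softmax`, `top2_gap`, `exit_layer`) and the per-layer logits are hypothetical values chosen for illustration, not figures from the paper.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top2_gap(logits: Sequence[float]) -> float:
    """Assumed confidence score: top probability minus the runner-up."""
    probs = sorted(softmax(logits), reverse=True)
    return probs[0] - probs[1]


def exit_layer(per_layer_logits: Sequence[Sequence[float]], threshold: float) -> int:
    """Return the 1-based layer at which the token would exit."""
    for index, logits in enumerate(per_layer_logits, start=1):
        if top2_gap(logits) >= threshold:
            return index
    return len(per_layer_logits)  # never confident enough: use every layer


# Hypothetical logits for one token over a 3-word vocabulary, layer by layer.
# The leading candidate becomes more dominant as more layers are applied.
token_logits = [
    [1.0, 0.5, 0.0],
    [2.0, 0.5, 0.0],
    [4.0, 0.5, 0.0],
    [6.0, 0.5, 0.0],
]

print(exit_layer(token_logits, threshold=0.5))  # 2
print(exit_layer(token_logits, threshold=0.9))  # 3
```

A lower threshold lets the same token exit earlier and saves more compute, while a higher threshold keeps the model working longer before it commits, which is the trade-off the two outputs in the illustration reflect.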

The researchers concluded the paper by noting that implementing CALM requires only minimal modifications in order to adapt a large language model to become faster.

This research is important because it opens the door to creating more complex AI models that are trained on substantially larger data sets without experiencing slower speed while maintaining a high performance level.

Yet it may be possible that this method can also benefit large language models that are trained on less data.

For example, InstructGPT models, of which ChatGPT is a sibling model, can have as few as roughly 1.3 billion parameters yet are still able to outperform models that have substantially more parameters.

The researchers noted in the conclusion:

“Overall, our complete adaptive compute framework for LMs requires minimal modifications to the underlying model and enables efficiency gains while satisfying rigorous quality guarantees for the output.”

Information about this research paper was published on Google’s AI blog on December 16, 2022. The research paper itself is dated October 25, 2022.

It will be interesting to see if this technology makes its way into large language models of the near future.

Read Google’s blog post:

Accelerating Text Generation with Confident Adaptive Language Modeling (CALM)

Read the Research Paper:

Confident Adaptive Language Modeling (PDF)

Featured image by Shutterstock/Master1305