There is concern about the lack of an easy way to opt out of having one’s content used to train large language models (LLMs) like ChatGPT. There is a way to do it, but it’s neither straightforward nor guaranteed to work.

How AIs Learn From Your Content

Large Language Models (LLMs) are trained on data that originates from multiple sources. Many of these datasets are open source and are freely used for training AIs.

In general, Large Language Models use a wide variety of sources to train from.

Examples of the kinds of sources used:

- Wikipedia

- Government court records

- Books

- Emails

- Crawled internet sites

There are actually portals and websites offering datasets that give away vast amounts of information.

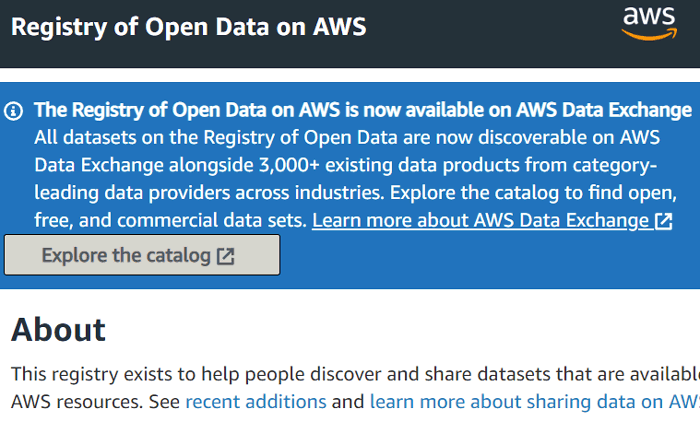

One of the portals is hosted by Amazon, offering thousands of datasets at the Registry of Open Data on AWS.

Screenshot from Amazon, January 2023

The Amazon portal with thousands of datasets is just one portal out of many others that contain even more datasets.

Wikipedia lists 28 portals for downloading datasets, including the Google Dataset Search and Hugging Face portals for finding thousands of datasets.

Datasets Used to Train ChatGPT

ChatGPT is based on GPT-3.5, also known as InstructGPT.

The datasets used to train GPT-3.5 are the same ones used for GPT-3. The major difference between the two is that GPT-3.5 used a technique known as reinforcement learning from human feedback (RLHF).

The five datasets used to train GPT-3 (and GPT-3.5) are described on page 9 of the research paper, Language Models are Few-Shot Learners (PDF).

The datasets are:

- Common Crawl (filtered)

- WebText2

- Books1

- Books2

- Wikipedia

Of the five datasets, the two that are based on a crawl of the Internet are:

- WebText2

- Common Crawl

About the WebText2 Dataset

WebText2 is a private OpenAI dataset created by crawling links from Reddit posts that had at least three upvotes.

The idea is that these URLs are trustworthy and will contain quality content.
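
The link-selection idea can be sketched in a few lines of Python. This is not OpenAI’s actual crawler, which has not been released; the `select_links` function, the `(url, score)` record shape, and the sample links are hypothetical, and the threshold is read as “at least three upvotes.”

```python
from urllib.parse import urlparse

# Assumed threshold: keep links whose Reddit post scored at least 3.
MIN_SCORE = 3

def select_links(submissions, min_score=MIN_SCORE):
    """Return unique http(s) links whose Reddit post reached min_score.

    `submissions` is an iterable of (url, score) pairs; this record
    shape is hypothetical and chosen only for illustration.
    """
    seen = set()
    selected = []
    for url, score in submissions:
        if score < min_score:
            continue  # below the upvote threshold, treated as low quality
        if urlparse(url).scheme not in ("http", "https"):
            continue  # only keep ordinary web links
        if url not in seen:  # the same link is often posted many times
            seen.add(url)
            selected.append(url)
    return selected

# Made-up sample data, not real Reddit posts.
links = [
    ("https://example.com/guide", 5),
    ("https://example.org/spam", 1),
    ("https://example.com/guide", 12),
    ("https://example.net/post", 3),
]
print(select_links(links))
# ['https://example.com/guide', 'https://example.net/post']
```

The upvote count acts as a cheap, crowd-sourced quality filter: no one has to read the pages, only check how the linking post was received.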

WebText2 is an extended version of the original WebText dataset developed by OpenAI.

The original WebText dataset had about 15 billion tokens. WebText was used to train GPT-2.

WebText2 is slightly larger at 19 billion tokens. WebText2 is what was used to train GPT-3 and GPT-3.5.

OpenWebText2

WebText2 (developed by OpenAI) is not publicly available.

However, there is a publicly available open-source version called OpenWebText2. OpenWebText2 is a public dataset created using the same crawl patterns, which presumably yield a similar, if not identical, set of URLs as the OpenAI WebText2.

I only mention this in case someone wants to know what’s in WebText2. One can download OpenWebText2 to get an idea of the URLs contained in it.

A cleaned-up version of OpenWebText2 can be downloaded here. The raw version of OpenWebText2 is available here.

I couldn’t find information about the user agent used by either crawler. Maybe it’s just identified as Python, but I’m not sure.

So as far as I know, there is no user agent to block, though I’m not 100% sure.

Nevertheless, we do know that if your site is linked from Reddit with at least three upvotes, then there’s a good chance that your site is in both the closed-source OpenAI WebText2 dataset and its open-source version, OpenWebText2.

More information about OpenWebText2 is here.
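
To see whether your own domain appears in a downloaded copy of OpenWebText2, a short script can scan the URL list. This is a minimal sketch that assumes you have already extracted the URLs into a plain-text file with one full URL per line; the released dataset’s actual file format may differ, and the `urls_for_domain` function and the `openwebtext2_urls.txt` file name are hypothetical.

```python
from urllib.parse import urlparse

def urls_for_domain(lines, domain):
    """Return URLs whose host is `domain` or one of its subdomains.

    `lines` can be any iterable of strings, such as an open text file
    with one URL per line (a hypothetical pre-extracted URL list).
    """
    domain = domain.lower().rstrip(".")
    matches = []
    for line in lines:
        url = line.strip()
        if not url:
            continue  # skip blank lines
        # .hostname is already lowercased; it is None for malformed URLs.
        host = (urlparse(url).hostname or "").rstrip(".")
        if host == domain or host.endswith("." + domain):
            matches.append(url)
    return matches

# Usage with a hypothetical file name:
# with open("openwebtext2_urls.txt", encoding="utf-8") as f:
#     print(urls_for_domain(f, "example.com"))

sample = [
    "https://www.example.com/a\n",
    "https://notexample.com/b\n",
    "https://example.com/c\n",
    "\n",
]
print(urls_for_domain(sample, "example.com"))
# ['https://www.example.com/a', 'https://example.com/c']
```

Matching on the parsed host, rather than searching for the domain as a substring, keeps look-alike domains such as notexample.com out of the results.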

Common Crawl

One of the most commonly used datasets consisting of Internet content is the Common Crawl dataset, which is created by a non-profit organization called Common Crawl.

Common Crawl data comes from a bot that crawls the entire Internet.

The data is downloaded by organizations wishing to use it and then cleaned of spammy sites, etc.

The name of the Common Crawl bot is CCBot.

CCBot obeys the robots.txt protocol, so it is possible to block Common Crawl with robots.txt and prevent your website data from making it into another dataset.

However, if your site has already been crawled, then it’s likely already included in multiple datasets.

Nevertheless, by blocking Common Crawl it’s possible to keep your website content out of new datasets sourced from newer Common Crawl data.

This is what I meant at the very beginning of the article when I wrote that the process is “neither straightforward nor guaranteed to work.”

The CCBot User-Agent string is:

CCBot/2.0

Add the following to your robots.txt file to block the Common Crawl bot:

    User-agent: CCBot
    Disallow: /
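
Before deploying the rule, you can sanity-check it with Python’s standard-library `urllib.robotparser`, which applies robots.txt rules the way a well-behaved crawler would. This is a sketch, not CCBot’s own parser, so it confirms that the file says what you intend rather than guaranteeing how CCBot behaves; the `is_blocked` helper and the example URL are illustrative.

```python
from urllib.robotparser import RobotFileParser

# The two directives that block Common Crawl: User-agent: CCBot, Disallow: /
ROBOTS_TXT = """\
User-agent: CCBot
Disallow: /
"""

def is_blocked(robots_txt, user_agent, url):
    """Return True if `robots_txt` forbids `user_agent` from fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() takes a list of lines
    return not parser.can_fetch(user_agent, url)

print(is_blocked(ROBOTS_TXT, "CCBot/2.0", "https://example.com/article"))  # True
print(is_blocked(ROBOTS_TXT, "Googlebot", "https://example.com/article"))  # False
```

Because the rule names only CCBot, other crawlers such as Googlebot are unaffected, which is usually what a publisher wants.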

An additional way to confirm that a CCBot user agent is legitimate is that it crawls from Amazon AWS IP addresses.

CCBot also obeys the nofollow robots meta tag directive.
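
Amazon publishes its IP ranges as JSON at https://ip-ranges.amazonaws.com/ip-ranges.json, so a server-log check can combine the user agent with the source IP. This is a minimal heuristic sketch: the `load_aws_networks` and `looks_like_ccbot` helpers are hypothetical, the sample data uses documentation-only address ranges instead of real AWS prefixes, and a match only shows that a request came from AWS (where anyone can rent servers), not that it definitely came from Common Crawl.

```python
import ipaddress
import json

def load_aws_networks(ip_ranges_json):
    """Build network objects from the JSON layout of Amazon's ip-ranges.json."""
    data = json.loads(ip_ranges_json)
    nets = [ipaddress.ip_network(p["ip_prefix"]) for p in data.get("prefixes", [])]
    nets += [ipaddress.ip_network(p["ipv6_prefix"]) for p in data.get("ipv6_prefixes", [])]
    return nets

def looks_like_ccbot(user_agent, ip, aws_networks):
    """Heuristic: a CCBot user agent AND a source IP inside AWS ranges."""
    if "ccbot" not in user_agent.lower():
        return False
    addr = ipaddress.ip_address(ip)
    # Membership is simply False when IPv4 and IPv6 are mixed.
    return any(addr in net for net in aws_networks)

# Sample input in the same shape as the real file, but using
# documentation-only address ranges instead of real AWS prefixes.
SAMPLE = json.dumps({
    "prefixes": [{"ip_prefix": "192.0.2.0/24", "service": "AMAZON"}],
    "ipv6_prefixes": [{"ipv6_prefix": "2001:db8::/32", "service": "AMAZON"}],
})
nets = load_aws_networks(SAMPLE)
print(looks_like_ccbot("CCBot/2.0", "192.0.2.10", nets))   # True
print(looks_like_ccbot("CCBot/2.0", "203.0.113.5", nets))  # False
```

In practice you would download the real file periodically, since Amazon updates its ranges over time.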

Use this in your robots meta tag:

    <meta name="CCBot" content="nofollow">
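
To audit pages for a tag such as `<meta name="CCBot" content="nofollow">`, Python’s standard-library `html.parser` is enough. This is a minimal sketch: the `RobotsMetaFinder` class and `ccbot_nofollow` function are hypothetical helpers, and treating the generic `<meta name="robots">` tag as also applying to CCBot is an assumption of this example.

```python
from html.parser import HTMLParser

class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="ccbot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = {}  # tag name -> set of lowercased directives

    def handle_starttag(self, tag, attrs):
        if tag != "meta":  # tag and attribute names arrive lowercased
            return
        attrs = {k: (v or "") for k, v in attrs}
        name = attrs.get("name", "").lower()
        if name in ("robots", "ccbot"):
            values = {d.strip().lower() for d in attrs.get("content", "").split(",") if d.strip()}
            self.directives.setdefault(name, set()).update(values)

def ccbot_nofollow(html):
    """Return True if the page asks CCBot (or all robots, by assumption) not to follow links."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    found = finder.directives.get("ccbot", set()) | finder.directives.get("robots", set())
    return "nofollow" in found

print(ccbot_nofollow('<head><meta name="CCBot" content="nofollow"></head>'))  # True
print(ccbot_nofollow('<head><meta name="description" content="x"></head>'))   # False
```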

A Consideration Before You Block Any Bots

Many datasets, including Common Crawl, could be used by companies that filter and categorize URLs in order to create lists of websites to target with advertising.

For example, a company named Alpha Quantum offers a dataset of URLs categorized using the Interactive Advertising Bureau Taxonomy. The dataset is useful for AdTech marketing and contextual advertising. Exclusion from a database like that could cause a publisher to lose potential advertisers.

Blocking AI From Using Your Content

Search engines allow websites to opt out of being crawled. Common Crawl also allows opting out. But there is currently no way to remove one’s website content from existing datasets.

Furthermore, research scientists don’t seem to offer website publishers a way to opt out of being crawled.

The article, Is ChatGPT Use Of Web Content Fair? explores the topic of whether it’s even ethical to use website data without permission or a way to opt out.

Many publishers may appreciate it if, in the near future, they are given more say in how their content is used, especially by AI products like ChatGPT.

Whether that will happen is unknown at this time.


Featured image by Shutterstock/ViDI Studio